Debugging models with the bias-variance trade-off

Systematically boosting model performance

Working with machine learning (ML) models is challenging, and the whole process involves a cascade of decisions whose consequences are not always evident at first.

For example, let’s say you have developed an ML model and the performance you observe is below what you want (and need) it to be. Which knob should you turn to boost your model’s performance?

Should you use a completely different modeling approach? Should you use a different set of features or pre-process them differently? Or would it be better to collect and label more data?

Notice that there are far too many possibilities and the mere process of listing all of them is overwhelming.

While seasoned practitioners might have a sense of how to proceed systematically, many data scientists and ML engineers are left haphazardly trying one solution after another, by trial and error.

Fortunately, it doesn’t always have to be this way. There is one powerful diagnostic that can give specific insights into what to do next when facing model issues: the bias-variance diagnostic, presented by Stanford professor Andrew Ng. As he points out,

[The bias-variance trade-off] is one of those concepts that if you can systematically apply, will make you much more efficient. This is maybe the single most useful tool I found for debugging learning algorithms.

In this post, we first present the bias-variance trade-off, a concept that appears frequently when working with ML. Then, we present the framework to diagnose bias and variance problems. If you are already familiar with the bias-variance trade-off, feel free to skip the next section and head straight to the diagnostic presentation.


The bias-variance trade-off

Part of the beauty of ML comes from the fact that some concepts have many layers of interpretation, each providing unique and valuable insights. One such concept is the bias-variance trade-off. In this section, we briefly explain one way to think about it that is useful for model debugging, but beware: this is one of those ideas that will keep surprising you year after year as you gain more practical experience.

As we explored in our blog post on model evaluation, one of the most important quantities practitioners care about in ML development is their model’s generalization capacity. In broad strokes, it represents how well the model would perform on new data, other than the data seen during training.

The question that arises, then, is: what are the barriers that are keeping models from generalizing well to new data?

It turns out that by analyzing the expected generalization error, it is possible to decompose it into three components:

1. Irreducible error: the data is intrinsically noisy and we can never expect any model to be perfect. There will always be an irreducible error component keeping models from perfectly generalizing to new data;

2. Bias: the bias component comes from the fact that when we choose a model, we are imposing a rigid structure that might be different from the one that generates the data. This mismatch between the modeling approach and the true data-generating process gives rise to the bias component;

3. Variance: the variance component arises from the fact that we are training a model on a finite data sample. The training sample that we have is random, so if we trained a model on a different dataset, we would end up with a different model. This illustrates that the model’s parameters themselves are random.
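For squared-error regression, this decomposition has a well-known closed form. Assuming the data is generated as $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has mean zero and variance $\sigma^2$, and writing $\hat f$ for a model trained on a random training set $\mathcal{D}$, the expected error at a point $x$ splits into the three components above (the squared loss is our assumption here; other losses decompose differently):

$$
\mathbb{E}_{\mathcal{D},\varepsilon}\big[(y - \hat f(x))^2\big]
= \underbrace{\big(\mathbb{E}_{\mathcal{D}}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}_{\mathcal{D}}\Big[\big(\hat f(x) - \mathbb{E}_{\mathcal{D}}[\hat f(x)]\big)^2\Big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible error}}
$$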

Let’s go through a code example to understand how each of these components interacts. First and foremost, we fix the random seed for reproducibility.

```python
import numpy as np

SEED = 33

np.random.seed(SEED)
```

Suppose we have a regression task at hand, where we want to predict a continuous label y given the input x. Moreover, since this is a toy example, imagine we know exactly how the data is generated: it is simply a 3rd-degree polynomial with some noise, given by the following function:

```python
def generate_dataset(num_samples: int = 100) -> tuple[np.ndarray, np.ndarray]:
    """Generates a new dataset of size `num_samples`.

    The inputs are evenly spaced on [-1, 1] and the labels follow a
    3rd-degree polynomial plus Gaussian noise (standard deviation 0.25).
    """
    x = np.linspace(-1, 1, num=num_samples)
    noise = np.random.normal(0, 0.25, num_samples)
    y = 2 * np.power(x, 3) + np.power(x, 2) - 0.5 + noise
    # scikit-learn expects a 2D feature matrix, hence the reshape
    return x.reshape(-1, 1), y
```

Now, we are interested in fitting a model that maps our x’s to y’s. In our toolkit, we have a linear regression with different polynomial features to choose from. Moreover, we want to understand how the bias, variance, and irreducible error components arise in this context. To do so, let’s create a function that fits a polynomial of a specified degree and returns the model’s predictions on the training set.

```python
from sklearn import linear_model
from sklearn.preprocessing import PolynomialFeatures


def fit_predict_polynomial(deg: int, x_train: np.ndarray, y_train: np.ndarray) -> np.ndarray:
    """Fits a polynomial of degree `deg` to `x_train` and `y_train` and returns
    the predictions made by the model on the training set.
    """
    # Expand x into the features [1, x, x^2, ..., x^deg]
    poly = PolynomialFeatures(degree=deg)
    x_train_ = poly.fit_transform(x_train)

    # Ordinary least squares on the expanded features is a polynomial regression
    clf = linear_model.LinearRegression()
    clf.fit(x_train_, y_train)

    preds = clf.predict(x_train_)
    return preds
```

Let’s now generate multiple datasets, fit the polynomials, and plot the results:

```python
import matplotlib.pyplot as plt

polynomial_degrees = [1, 3, 100]
num_datasets = 5

fig, axs = plt.subplots(ncols=len(polynomial_degrees), figsize=(20, 5))

for i, polynomial_degree in enumerate(polynomial_degrees):
    axs[i].set_title(f'Polynomial fits of degree {polynomial_degree}')
    axs[i].set_xlabel('x')
    axs[i].set_ylabel('y')

    for j in range(num_datasets):
        # generate new dataset
        x, y = generate_dataset()

        # fit polynomial and make predictions on the training set
        preds = fit_predict_polynomial(deg=polynomial_degree, x_train=x, y_train=y)

        # plot the results
        axs[i].scatter(x, y, c='#e5e5e5')
        axs[i].plot(x, preds, linewidth=2.5)

plt.show()
```

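Output: [figure: three panels, “Polynomial fits of degree 1”, “Polynomial fits of degree 3”, and “Polynomial fits of degree 100”, each showing five fitted curves over the training points]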

From the three previous plots, it is possible to identify which components of the generalization error (bias, variance, and irreducible error) are dominant for each model.

The first model is a vanilla linear regression. It doesn’t generalize well because it has high bias: there is a significant mismatch between a 1st-degree polynomial and the 3rd-degree polynomial that generates the data. Moreover, it has low variance, as shown by the fact that the fitted line barely changes across the datasets it was trained on.

The second model is a 3rd-degree polynomial, which fits the data just right. Notice, however, that even though there is no mismatch between the data-generating model and our model, the fit is not perfect. There is always an irreducible error component because the data is noisy.

The third model is a polynomial of degree 100. It doesn’t generalize well because it has high variance. A high-degree polynomial captures much of the nuance of the data it was trained on, including the noise. For that reason, when we change the dataset, we get significantly different models, even though each of them has low bias.

As we observed in the code example, there is a trade-off between the bias and variance components: when we try to reduce one, the other usually increases. This relationship can also be thought of as the constant dance between underfitting and overfitting the training data. High-bias models underfit the training data, while high-variance models overfit it; it is the job of ML practitioners to find a sweet spot between the two.
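The plots give a qualitative picture, but the trade-off can also be measured. A minimal sketch, assuming we keep the same input grid and cubic function as `generate_dataset` and only redraw the noise: fit each polynomial on many datasets, then measure how far the average fit is from the true function (squared bias) and how much individual fits spread around that average (variance). The helper names `true_function` and `estimate_bias_variance` are hypothetical, and degree 15 stands in for the degree-100 model to keep the least-squares problem numerically well behaved.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

rng = np.random.default_rng(33)
NOISE_STD = 0.25  # same noise level as generate_dataset


def true_function(x: np.ndarray) -> np.ndarray:
    """Noiseless cubic used by generate_dataset (hypothetical helper name)."""
    return 2 * x**3 + x**2 - 0.5


def estimate_bias_variance(degree: int, num_datasets: int = 200,
                           num_samples: int = 100) -> tuple[float, float]:
    """Monte Carlo estimate of squared bias and variance, averaged over the input grid."""
    x = np.linspace(-1, 1, num_samples)
    X = x.reshape(-1, 1)
    f = true_function(x)
    preds = np.empty((num_datasets, num_samples))
    for i in range(num_datasets):
        # Only the noise changes between datasets; the inputs stay on the same grid
        y = f + rng.normal(0, NOISE_STD, num_samples)
        model = make_pipeline(PolynomialFeatures(degree=degree), LinearRegression())
        model.fit(X, y)
        preds[i] = model.predict(X)
    mean_pred = preds.mean(axis=0)                  # the "average model" over datasets
    bias_sq = float(np.mean((mean_pred - f) ** 2))  # distance of average model from truth
    variance = float(np.mean(preds.var(axis=0)))    # spread of fits around the average model
    return bias_sq, variance


results = {deg: estimate_bias_variance(deg) for deg in (1, 3, 15)}
for deg, (bias_sq, variance) in results.items():
    print(f"degree={deg:2d}  bias^2={bias_sq:.4f}  variance={variance:.4f}  "
          f"noise={NOISE_STD**2:.4f}")
```

If the sketch behaves as the plots suggest, the degree-1 model shows by far the largest squared bias and the degree-15 model the largest variance, while the irreducible noise term stays at 0.0625 for every model.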

Debugging ML models

The idea of decomposing the generalization error into bias and variance components can be applied to the context of debugging ML models. Since one of the quantities practitioners are interested in minimizing is their models’ generalization error, identifying whether the model’s problems emerge from variance or bias is extremely important.

Instead of trying the possible solutions to model issues (listed in the introduction) one by one through trial and error, running the bias-variance diagnostic makes it clear which type of solution is likely to work.

In this section, we present the signatures of bias and variance problems, as well as common solutions to address them, as presented by Stanford professor Andrew Ng in the CS 229 course.

Identifying variance problems

If a model has a much lower performance on the validation set than it has on the training set, then it is likely suffering from variance issues. In this scenario, the model is overfitting the training data and not generalizing well to the validation data.

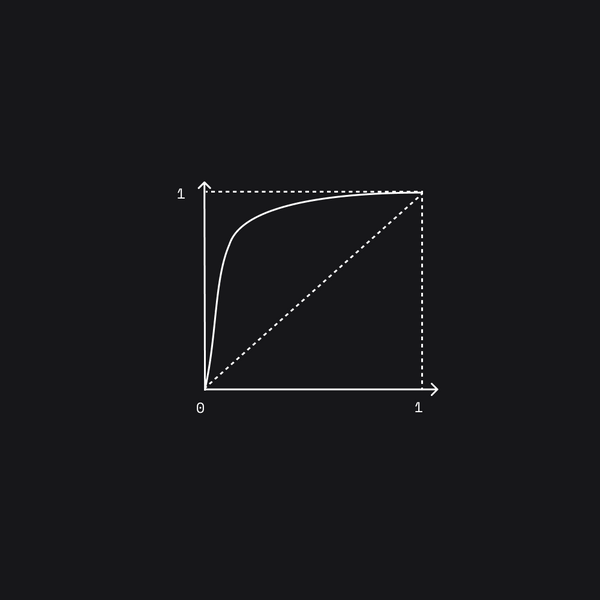

Let’s look at the typical learning curves when this type of issue is present. The x-axis represents the training set size, and the y-axis represents the error.

As the training set size increases (i.e., we have more data to learn from), the validation error tends to decrease, because the model learns more about the underlying data. The training error, on the other hand, tends to increase with the training set size. With just a few examples (a small training set), the model can fit almost all of them perfectly; as more examples are added, fitting every one of them, noise included, becomes harder.

The observed gap between the training and validation errors is a signal that this learning algorithm has a variance problem.
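Learning curves like these can be produced by refitting a model on growing subsets of the training data. The sketch below is one way to do it, under a few assumptions of ours: inputs are drawn uniformly from [-1, 1] (instead of the evenly spaced grid of `generate_dataset`) so that subsets of any size cover the whole range, a degree-15 polynomial plays the high-variance model, and `sample_data` and `learning_curve_errors` are hypothetical helper names.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

rng = np.random.default_rng(33)


def sample_data(num_samples: int) -> tuple[np.ndarray, np.ndarray]:
    """Same cubic and noise level as generate_dataset, but with x drawn uniformly (assumption)."""
    x = rng.uniform(-1, 1, num_samples)
    y = 2 * x**3 + x**2 - 0.5 + rng.normal(0, 0.25, num_samples)
    return x.reshape(-1, 1), y


def learning_curve_errors(degree: int, train_sizes: list[int],
                          num_val: int = 2000) -> tuple[np.ndarray, np.ndarray]:
    """Training and validation MSE of a polynomial model fit on growing training sets."""
    x_val, y_val = sample_data(num_val)
    x_pool, y_pool = sample_data(max(train_sizes))
    train_errors, val_errors = [], []
    for n in train_sizes:
        # Train on the first n examples of a fixed pool, so the training sets are nested
        model = make_pipeline(PolynomialFeatures(degree=degree), LinearRegression())
        model.fit(x_pool[:n], y_pool[:n])
        train_errors.append(mean_squared_error(y_pool[:n], model.predict(x_pool[:n])))
        val_errors.append(mean_squared_error(y_val, model.predict(x_val)))
    return np.array(train_errors), np.array(val_errors)


train_sizes = [20, 40, 80, 160, 320]
train_err, val_err = learning_curve_errors(degree=15, train_sizes=train_sizes)
for n, tr, va in zip(train_sizes, train_err, val_err):
    print(f"n={n:4d}  train MSE={tr:.4f}  val MSE={va:.4f}  gap={va - tr:.4f}")
```

On this setup, the gap between validation and training error is expected to be large for the smallest training sets and to shrink as more data is added, which is the variance signature described above.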

Identifying that a model is suffering from a variance problem is useful because practitioners then have clear indications of how to fix it. Getting more training data and decreasing model complexity (by using fewer features, a simpler model, or stronger regularization) can all be beneficial.

Identifying bias problems

If a model is performing poorly both on the training set and on the validation set, then it is likely suffering from bias issues. That means that the model might be underfitting the training data.

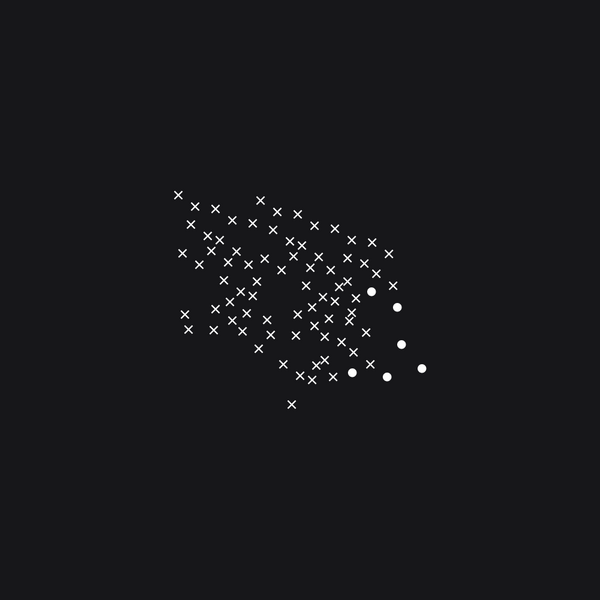

Now, let’s look at the typical learning curves when there is a bias problem, using the same axes as the previous figure.

In this case, notice that the gap between training and validation errors is gone and both of them are unacceptably high. Moreover, the validation error has plateaued.

Extrapolating the curves to the right suggests that, no matter how much more data is collected, the model is unlikely to achieve the desired performance.
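scikit-learn can compute such curves directly with `sklearn.model_selection.learning_curve`. The sketch below applies it to the plain linear regression (a degree-1 polynomial) from the code example, assuming 500 samples with x drawn uniformly from [-1, 1] and ten random 80/20 train/validation splits. Because scikit-learn scorers follow a “higher is better” convention, the mean squared error comes back negated.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import ShuffleSplit, learning_curve
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

rng = np.random.default_rng(33)

# Assumption: same cubic and noise level as generate_dataset, with x drawn uniformly
x = rng.uniform(-1, 1, 500).reshape(-1, 1)
y = 2 * x[:, 0]**3 + x[:, 0]**2 - 0.5 + rng.normal(0, 0.25, 500)

# A degree-1 polynomial (plain linear regression) plays the high-bias model
model = make_pipeline(PolynomialFeatures(degree=1), LinearRegression())

sizes, train_scores, val_scores = learning_curve(
    model,
    x,
    y,
    train_sizes=np.linspace(0.1, 1.0, 5),  # fractions of the 400-sample training split
    cv=ShuffleSplit(n_splits=10, test_size=0.2, random_state=33),
    scoring="neg_mean_squared_error",
)

# Undo the sign convention and average over the ten splits
train_mse = -train_scores.mean(axis=1)
val_mse = -val_scores.mean(axis=1)
for n, tr, va in zip(sizes, train_mse, val_mse):
    print(f"n={n:4d}  train MSE={tr:.4f}  val MSE={va:.4f}")
```

Both errors should end up close to each other and well above the noise variance of 0.0625: the gap is gone, but the error is still unacceptably high.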

Identifying a bias problem is useful because practitioners then have indications of how to fix it. Getting more training data won’t help, but increasing model complexity (by using more features, a more complex model, or weaker regularization) can.
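The two signatures described in this section can be condensed into a small rule of thumb. The `diagnose` function below is a hypothetical helper, not part of any library or of the CS 229 material; `target_error` (the desired performance) and `max_gap` (how large a train/validation gap counts as “large”) are thresholds you would have to pick for your own problem.

```python
def diagnose(train_error: float, val_error: float,
             target_error: float, max_gap: float) -> list[str]:
    """Hypothetical helper mapping training/validation errors to suggested fixes.

    `target_error` and `max_gap` are user-chosen thresholds (assumptions).
    """
    suggestions = []
    # Bias signature: even the training error misses the target
    if train_error > target_error:
        suggestions.append(
            "high bias: increase model complexity "
            "(more features, a more complex model, or weaker regularization)"
        )
    # Variance signature: validation error is much worse than training error
    if val_error - train_error > max_gap:
        suggestions.append(
            "high variance: get more training data or decrease model complexity "
            "(fewer features, a simpler model, or stronger regularization)"
        )
    if not suggestions:
        suggestions.append("no clear bias or variance problem at this target")
    return suggestions


print(diagnose(train_error=0.24, val_error=0.25, target_error=0.1, max_gap=0.05))
```

Both checks can fire at once, in which case the model suffers from bias and variance problems simultaneously.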

The bias-variance trade-off appears constantly when working with ML. Andrew Ng points out that it is difficult to know in advance whether a learning algorithm will suffer from bias or variance problems. Therefore, he recommends starting with a “quick and dirty” algorithm, that is, a model that is simple to implement, and then running the diagnostics for debugging. For that, you might find our blog post on baseline models helpful.