Model evaluation in machine learning

Understanding the true purpose of model evaluation in the quest for high-quality models

Model evaluation is a fundamental component of the machine learning (ML) development pipeline. Despite its importance, it is probably the part that is most often misinterpreted by practitioners who are too eager to ship their models.

In this post, we start by exploring the true purpose of model evaluation, which justifies why it is taught in virtually every introductory ML class. We then go through an example that illustrates how misleading aggregate metrics can be. Finally, we make an analogy that can help us interpret these metrics for what they really are, avoiding common deceptions.

At the end of this post, you will find pointers to recent and important references, if you want to dive deeper into the topic.

Model evaluation and the ML development pipeline

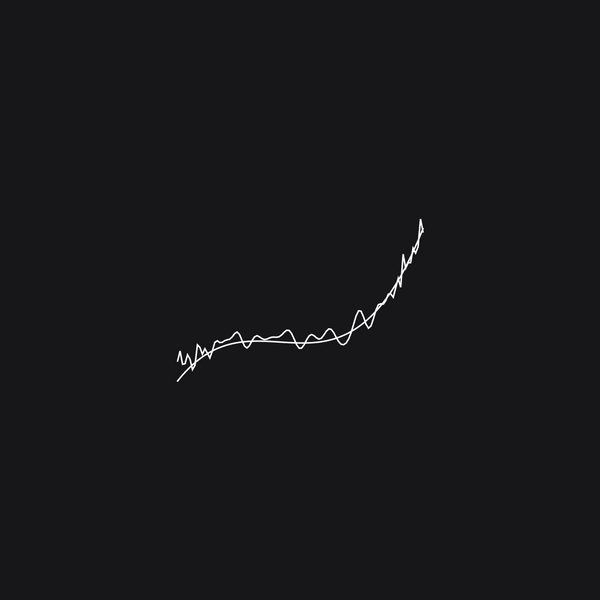

When it comes to ML development, one of the most important quantities that you, as an ML engineer or data scientist, were taught to care about is your model’s generalization capacity. In broad strokes, it represents how your model would perform on new data that it did not see during training.

After being developed, the ML model will be deployed in the real world, where it will inevitably encounter new data. Therefore, it is natural that the generalization capacity is something every stakeholder deeply cares about, as the model’s performance out in the wild will heavily dictate whether or not it has achieved its purposes.

But if, by definition, the generalization capacity involves unforeseen data, how could you calculate it?

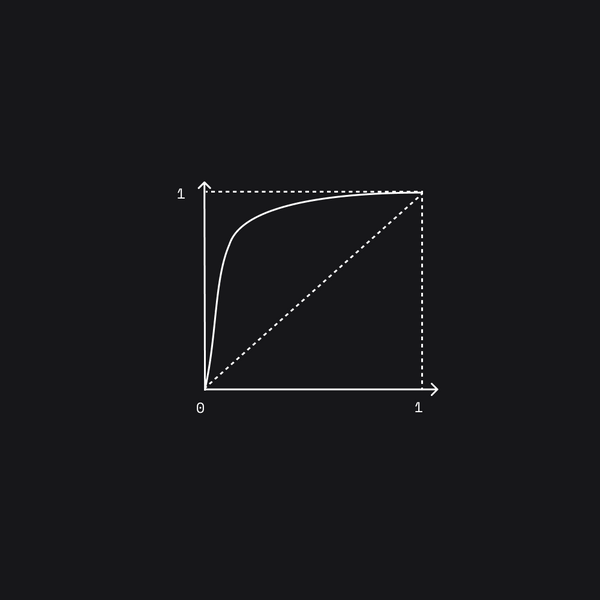

Mathematical statistics gives us possible estimation methods. The use of an independent holdout dataset or of cross-validation (CV) is the suggested textbook approach, as they provide almost unbiased (in the statistical sense) estimators of the model’s true performance on new data.
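To make the two estimators concrete, here is a minimal sketch of a holdout split and of k-fold CV written in plain Python. The synthetic one-feature dataset, the toy `threshold_classifier`, and all function names are assumptions made for illustration; in practice you would use your own model and data (or a library's built-in splitters).

```python
import random

def threshold_classifier(train):
    """Toy model: predict 1 when x exceeds the midpoint of the two class means.
    Assumes both classes are present in the training rows."""
    xs0 = [x for x, y in train if y == 0]
    xs1 = [x for x, y in train if y == 1]
    midpoint = (sum(xs0) / len(xs0) + sum(xs1) / len(xs1)) / 2
    return lambda x: 1 if x > midpoint else 0

def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)

def holdout_estimate(data, test_fraction=0.2):
    """Train on one part of the (already shuffled) data, score on the rest."""
    n_test = int(len(data) * test_fraction)
    test, train = data[:n_test], data[n_test:]
    return accuracy(threshold_classifier(train), test)

def cross_val_estimate(data, k=5):
    """Average the accuracy over k folds, each used once as the test set."""
    folds = [data[i::k] for i in range(k)]
    scores = []
    for i in range(k):
        train = [row for j, fold in enumerate(folds) if j != i for row in fold]
        scores.append(accuracy(threshold_classifier(train), folds[i]))
    return sum(scores) / k

# Synthetic data (hypothetical): two overlapping Gaussian classes.
rng = random.Random(0)
data = [(rng.gauss(0, 1), 0) for _ in range(200)]
data += [(rng.gauss(2, 1), 1) for _ in range(200)]
rng.shuffle(data)

holdout_acc = holdout_estimate(data)
cv_acc = cross_val_estimate(data)
print(f"holdout accuracy:   {holdout_acc:.3f}")
print(f"5-fold CV accuracy: {cv_acc:.3f}")
```

Both numbers estimate the same quantity, the accuracy on unseen data drawn from the same distribution. Note that each one is still a single aggregate number.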

The simplicity and the theoretical guarantees make their use appealing, to the point where virtually every introductory ML course dedicates at least a few lectures to the topic, particularly emphasizing the importance of properly evaluating models to combat overfitting (which is depicted as the scariest monster there is).

Inebriated by the noble pursuit of generalization capacity, we rarely stop to think twice about what information the model evaluation procedures actually provide and to what extent we can rely on them. Add to that the fact that we live amid the golden age of benchmarks, where a new state-of-the-art accuracy is read as a synonym for progress, and it is not surprising that practitioners often interpret performance on a holdout set as a proxy for model quality.

The deception of aggregate metrics

Let’s start with a concrete example.

Imagine you have trained a classifier that predicts whether a user will churn or continue using your platform based on a set of features, such as age, gender, ethnicity, geography, and others. Now, you want to know your model’s performance, so you evaluate its accuracy on a validation set. The accuracy you obtain is equal to 90%. That’s great and you are feeling proud of your work!

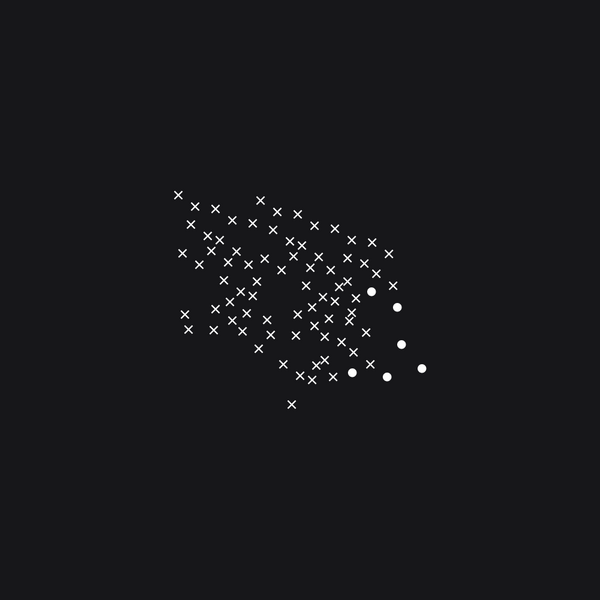

The 90% accuracy, as an aggregate metric, summarizes the performance of your model across your whole validation set. It is a useful first metric to look at, but it doesn’t convey the complete story of how your model behaves.

Is that accuracy sustained across different subgroups of the data? For example, how does your model perform for users aged between 25 and 35? What about users based outside the US?

Notice that from a business perspective, the answers to these questions might be very relevant, so you need to be confident that your model behaves consistently across these groups.

What you will most likely find out is that the accuracy of your model is not uniform across different cohorts of the data. Furthermore, you may even encounter some data pockets with low accuracies and specific failure modes.

If you looked only at the aggregate metric (the accuracy, in this case), you would have a myopic view of your model’s performance and think that it was satisfactory. This is why analyzing different cohorts of the data is critical to building trust in your model and to avoid being surprised by failure modes only after your model is deployed to production.
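As a rough illustration of cohort analysis, the sketch below slices accuracy by a single feature. The records, the `region` field, and the counts are invented for this example (they are chosen so that the overall accuracy is exactly 90%); `accuracy_by_cohort` is a hypothetical helper, not part of any library.

```python
from collections import defaultdict

def accuracy_by_cohort(records, cohort_key):
    """Group records by the value of cohort_key and compute accuracy per group."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for r in records:
        cohort = r[cohort_key]
        totals[cohort] += 1
        hits[cohort] += int(r["y_true"] == r["y_pred"])
    return {c: hits[c] / totals[c] for c in totals}

def make_records(region, n_correct, n_wrong):
    """Build synthetic validation records for one region (illustrative only)."""
    correct = [{"region": region, "y_true": 1, "y_pred": 1}] * n_correct
    wrong = [{"region": region, "y_true": 1, "y_pred": 0}] * n_wrong
    return correct + wrong

# 100 synthetic validation records: 80 from the US, 20 from elsewhere.
records = make_records("US", 77, 3) + make_records("non-US", 13, 7)

overall = sum(r["y_true"] == r["y_pred"] for r in records) / len(records)
per_region = accuracy_by_cohort(records, "region")

print(overall)     # 0.9
print(per_region)  # {'US': 0.9625, 'non-US': 0.65}
```

In this made-up validation set, the 90% headline figure hides a cohort where roughly one in three predictions is wrong. The same helper can be pointed at any other field, such as an age bucket.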

In the previous example, the churn classifier would probably be used internally by a company, for strategic decisions. Decisions based on its wrong predictions can waste precious resources, such as spending time and energy to retain users from groups that were never going to churn, but for whom the model predicted the wrong label.

In other cases, when we think about ML systems in production, the user is the one interacting directly with the model.

What happens if you deploy a model that has completely different accuracies for distinct user groups? Part of your users would be very satisfied with your system, while others constantly experience your model making obvious mistakes — or worse, exhibiting biases and behaving in unethical ways.

Ideally, in an application powered by ML, a better model should attract more users, but if by “better” you mean with better performance on a holdout set, the effect can be the opposite!

Lossy compression

The problem is that although the model’s performance on a holdout set or obtained via CV is indeed related to its quality, on its own it provides a very low-resolution picture of what’s going on.

Aggregate metrics, such as accuracy, precision, recall, and F1, summarize the model’s performance across a whole dataset. They essentially compress a lot of information and behaviors into a single number. This is a lossy compression process, and relying too heavily on it ends up being an oversimplification.

A 90% accuracy obtained via cross-validation might look good on a landing page or in an academic paper, but it tells little about what the model has actually learned or how that accuracy translates to different subsets of the data. Furthermore, such a metric shows only a glimpse of how the model will behave in the wild, where it will encounter a long, long tail of edge cases. All of this additional information is lost and cannot be inferred directly from the aggregate metric.

For example, a fraud classifier that always predicts the majority class would have very high accuracy, but that model is useless. Even by changing the aggregate metric to precision, the most pressing questions are still not being addressed: is the company saving money by preventing fraud? Are the customers happy or annoyed with the detection system in place? Do they trust the company’s systems and understand why they're doing particular things?
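The majority-class case can be checked with a few lines of arithmetic. The sketch below assumes a hypothetical dataset of 1,000 transactions of which 10 are fraudulent; the `evaluate` helper and the class ratio are illustrative choices, not figures from a real system.

```python
def evaluate(y_true, y_pred):
    """Return accuracy, precision, and recall for binary labels (1 = fraud)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        # Precision and recall are undefined when their denominator is zero.
        "precision": tp / (tp + fp) if (tp + fp) else None,
        "recall": tp / (tp + fn) if (tp + fn) else None,
    }

# Hypothetical data: 10 fraudulent transactions out of 1,000.
y_true = [1] * 10 + [0] * 990
# A "model" that always predicts the majority class (not fraud).
y_pred = [0] * len(y_true)

metrics = evaluate(y_true, y_pred)
print(metrics)  # {'accuracy': 0.99, 'precision': None, 'recall': 0.0}
```

The accuracy is 99%, yet the model catches no fraud at all, and precision is not even defined because it never flags a transaction.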

But lossy compressions are not inherently bad.

In fact, they are a necessary construct that allows us to make sense of the unfathomable amount of information in the world. When working with such constructs, though, it is key that we remember exactly what they represent and critically think about the fundamental limits of their use.

For example, a low-resolution picture is the result of a lossy compression process and is great for instant messaging, but not for a photographer who wants to be featured on the cover of NatGeo. An audiophile might think it’s torture listening to mp3 files with cheap earphones, but a driver can feel joy while listening to compressed and noisy music on the radio.

Similarly, aggregate metrics on a holdout dataset are good first metrics to look at in the process of constructing a high-quality model, but no one can claim they have a great model just because it has a high accuracy or precision.

Remember what aggregate metrics represent: they inform us how well a specific model is performing on a specific dataset. We need to interpret them accordingly, for what they really are.

Predicting recidivism

The real-world consequences of deploying a model after placing too much trust in aggregate metrics can be tragic.

ML models have been used to predict recidivism since the late 1990s and early 2000s, i.e., models that strive to compute the likelihood of an offender committing another crime. The results produced by these models are often used to support judicial decisions.

This is clearly a high-stakes situation: someone's life might be on the line, one way or the other, as a result of actions taken based on the output of an ML model. But are these models trustworthy?

In a famous case involving the risk assessment software COMPAS, the debate centered on whether the model predicted a much higher recidivism rate for black defendants than for white defendants. In terms of accuracy and error rates, the model indeed behaved differently for distinct ethnicities. The aggregate metrics were not uniform across these data cohorts.
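One way to look past a single accuracy figure in a setting like this is to break the errors down by type and by group. The sketch below is purely illustrative: the groups, the counts, and the `error_rates` helper are invented and have no relation to actual COMPAS data.

```python
def error_rates(rows):
    """rows holds (y_true, y_pred) pairs, where 1 means 'predicted/observed to reoffend'."""
    fp = sum(1 for y, p in rows if y == 0 and p == 1)
    fn = sum(1 for y, p in rows if y == 1 and p == 0)
    negatives = sum(1 for y, _ in rows if y == 0)
    positives = sum(1 for y, _ in rows if y == 1)
    correct = sum(1 for y, p in rows if y == p)
    return {
        "accuracy": correct / len(rows),
        "false_positive_rate": fp / negatives,
        "false_negative_rate": fn / positives,
    }

def make_group(tp, fn, fp, tn):
    """Build synthetic (y_true, y_pred) pairs from confusion-matrix counts."""
    return [(1, 1)] * tp + [(1, 0)] * fn + [(0, 1)] * fp + [(0, 0)] * tn

# Invented counts for two hypothetical groups of 100 people each.
groups = {
    "group_a": make_group(tp=40, fn=10, fp=20, tn=30),
    "group_b": make_group(tp=30, fn=20, fp=10, tn=40),
}

rates = {name: error_rates(rows) for name, rows in groups.items()}
for name, r in rates.items():
    print(name, r)
```

In this made-up data, both groups have the same accuracy (70%), but group_a has twice the false positive rate of group_b, while group_b has twice the false negative rate. A per-group accuracy check alone would not reveal that difference.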

Since it was first developed in 1998, the COMPAS model has been used to evaluate more than 1 million offenders. Whether ML models are appropriate for the task at hand is a discussion outside the scope of this post. What is unquestionable is the impact that such a model had on the lives of so many people.

Further reading

Model evaluation is a fundamental component of the ML development pipeline, but it is easy to forget what its purpose and limitations are. Recently, important contributions published at leading conferences have raised the flag that the ML field’s excessive reliance on benchmarks might be doing a lot of harm to the community as a whole. If you are interested, check out some of the work done for AI in general, computer vision, and natural language processing (NLP).

This is a topic that we deeply care about at Openlayer, as we build the tooling that helps practitioners evaluate their models’ quality in a much more comprehensive way, by making model debugging, testing, and validation easy and intuitive. Feel free to check out our white paper, where we explore some of these topics from a broader perspective.

* A previous version of this article listed the company name as Unbox, which has since been rebranded to Openlayer.