Understanding and measuring data quality

The key ingredient for high-quality models

Modern companies widely recognize the value of data for driving business growth. However, high-quality data is far more valuable than poor-quality data. As companies accumulate petabytes of data from various sources, it becomes imperative to focus on data quality and filter out bad data.

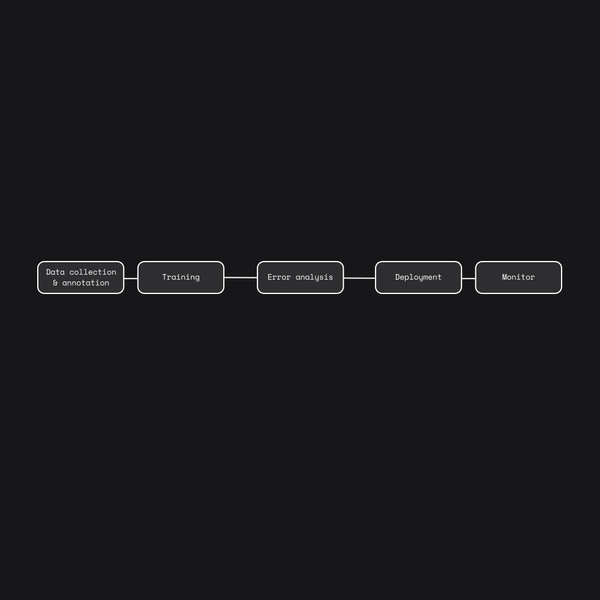

Data is the fundamental building block for predictive machine learning models. Although having access to greater amounts of data is beneficial, it doesn’t always translate to better-performing machine learning models. Sampling training data that passes quality checks and meets certain acceptance criteria can significantly boost the accuracy of the model predictions.

In this article, you’ll learn more about why high-quality data is essential for building robust machine learning models, expanding on the various parameters that define data quality: accuracy, completeness, consistency, timeliness, uniqueness, and validity. You’ll also explore a few mechanisms you can implement to measure and improve the quality of your data.


What is data quality?

Data quality is a measure of how suitable the data is for its intended applications in data analytics, data science, or machine learning. There are several dimensions along which data quality is measured, which include the following:

- Accuracy

- Completeness

- Consistency

- Timeliness

- Uniqueness

- Validity

Measuring the quality of data in terms of the above parameters is critical for organizations to assess whether their in-house data is suitable for downstream applications.

Why is data quality important?

Data quality is an important determinant of the quality of decision-making within an organization. Poor-quality data leads to inaccurate analytics and machine learning models, which might adversely impact various business operations as well as customer experience. Decisions and business strategies based on flawed data can have massive consequences.

Typical data-quality issues include data that is incomplete, duplicated, inconsistent, incorrect, missing, poorly defined, poorly organized, or stale, as well as data-security problems.

In data science use cases, the consequences of using poor-quality data can be immense: machine learning models trained on low-quality data tend to generate weak or inaccurate predictions, and the root cause of such errors is not easy to troubleshoot.

Deep-learning models in particular are very data-hungry, and their state-of-the-art performance is driven by the massive amounts of data on which they are trained. At the same time, recent work has shown that training models on less, but higher-quality, data better reflects real-world scenarios, and this approach is increasingly becoming the norm.

The cost of bad data to organizations is also enormous: according to an IBM estimate, poor-quality data costs the US economy USD 3.1 trillion per year. It is therefore paramount for organizations to invest in proper measurement and evaluation of data quality before building data-driven applications or devising new business strategies.

Determining data quality

Several organizations, such as the International Monetary Fund (IMF) and the World Bank, have formulated Data Quality Assessment Frameworks (DQAFs) that establish clear guidelines for measuring data quality. Commonly used dimensions include accuracy, completeness, consistency, timeliness, uniqueness, and validity.

This section will focus on each of these data-quality dimensions and discuss how they define the quality of data.

Accuracy

Accuracy, as the term implies, is a pivotal aspect of data quality—it means that the information is correct. Naturally, inaccurate information can cause many significant problems for a business.

For instance, consider a case in which the times of financial transactions are recorded incorrectly because the system fails to account for daylight saving time. The resulting one-hour offset could lead to inaccurate analysis and reporting of core business metrics like daily sales and revenue; for example, transactions made shortly after midnight would be attributed to the previous day.

Such inaccuracies can propagate into incorrect financial and tax filings, which could result in penalties from regulatory bodies.
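To make the daylight saving time example concrete, here is a minimal Python sketch that aggregates daily revenue from UTC timestamps. The transactions, amounts, and the choice of US Eastern time are hypothetical. It shows how a stale fixed offset (EST, UTC-5) applied in July, when the correct offset is EDT (UTC-4), moves a sale made just after midnight into the previous day's totals.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

# Hypothetical transactions for a store in US Eastern time: (UTC timestamp, amount in USD)
TRANSACTIONS = [
    (datetime(2024, 7, 1, 3, 0, tzinfo=timezone.utc), 40.0),
    (datetime(2024, 7, 1, 4, 30, tzinfo=timezone.utc), 25.0),
    (datetime(2024, 7, 1, 15, 0, tzinfo=timezone.utc), 60.0),
]

EDT = timezone(timedelta(hours=-4), "EDT")  # correct offset in July (daylight saving time)
EST = timezone(timedelta(hours=-5), "EST")  # stale offset that was never updated for DST


def daily_revenue(transactions, tz):
    """Sum transaction amounts by local calendar date in the given time zone."""
    totals = defaultdict(float)
    for ts, amount in transactions:
        local_day = ts.astimezone(tz).date().isoformat()
        totals[local_day] += amount
    return dict(totals)


print(daily_revenue(TRANSACTIONS, EDT))  # {'2024-06-30': 40.0, '2024-07-01': 85.0}
print(daily_revenue(TRANSACTIONS, EST))  # {'2024-06-30': 65.0, '2024-07-01': 60.0}
```

In production code, a named zone such as `zoneinfo.ZoneInfo("America/New_York")` applies DST transitions automatically, which avoids relying on a hand-maintained offset like the ones above.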

Completeness

Completeness refers to how comprehensive the data is and whether it contains all the fields and values necessary to make it fit for the intended purpose. Incomplete data often contains empty or missing values across rows or columns and may be unusable for further analysis.

For instance, if a customer’s email address is missing, then this customer may not feature in any marketing campaigns, resulting in a potential loss of business for the company.
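A simple way to quantify completeness is the fraction of records that have a non-empty value for each field. The sketch below assumes records are plain Python dictionaries and treats both `None` and the empty string as missing. The customer data is hypothetical.

```python
def completeness(records, fields):
    """Return, for each field, the fraction of records with a non-empty value."""
    total = len(records)
    report = {}
    for field in fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        report[field] = filled / total if total else 0.0
    return report


# Hypothetical customer records; two are missing an email address
customers = [
    {"id": 1, "name": "Ana", "email": "ana@example.com"},
    {"id": 2, "name": "Ben", "email": ""},
    {"id": 3, "name": "Chen", "email": None},
    {"id": 4, "name": "Dee", "email": "dee@example.com"},
]

print(completeness(customers, ["id", "name", "email"]))
# {'id': 1.0, 'name': 1.0, 'email': 0.5}

# Customers who cannot be reached by an email campaign
unreachable = [c["id"] for c in customers if not c.get("email")]
print(unreachable)  # [2, 3]
```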

Consistency

Consistency is another fundamental trait of data quality, as it can affect the usage of the entire data set. If a data set has millions of records but some rows store a customer’s name as “CustomerName” while the remaining rows store the same information as “FirstName” and “LastName” separately, it might lead to inaccurate results and analysis.
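One way to resolve this particular inconsistency is to map both schemas onto a single field before analysis. In this minimal sketch, the input column names come from the example above, while the target field `FullName` is a hypothetical choice. Merging first and last names is the safer direction, because splitting a full name back into its parts is often ambiguous.

```python
def normalize_name(record):
    """Return a copy of the record with one 'FullName' field, whichever name schema it used."""
    rec = dict(record)
    if "CustomerName" in rec:
        rec["FullName"] = rec.pop("CustomerName").strip()
    else:
        first = rec.pop("FirstName", "").strip()
        last = rec.pop("LastName", "").strip()
        rec["FullName"] = f"{first} {last}".strip()
    return rec


rows = [
    {"id": 1, "CustomerName": "Ana Lima"},
    {"id": 2, "FirstName": "Ben", "LastName": "Okafor"},
]
print([normalize_name(r) for r in rows])
# [{'id': 1, 'FullName': 'Ana Lima'}, {'id': 2, 'FullName': 'Ben Okafor'}]
```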

Another common example of inconsistent data relates to the underlying format or units of specific data fields. For instance, timestamps are often stored in inconsistent formats, and monetary amounts may be recorded in different currencies from country to country.
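For date formats, a common remedy is to parse every known input format and store a single canonical representation such as ISO 8601. The list of formats below is a hypothetical example. Each source system's actual conventions need to be confirmed, because a value like "05/03/2024" can mean either 5 March or 3 May.

```python
from datetime import datetime

# Hypothetical formats observed across source systems. Listing both "%d/%m/%Y" and
# "%m/%d/%Y" would silently resolve "05/03/2024" to whichever format comes first.
KNOWN_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y"]


def to_iso_date(raw: str) -> str:
    """Parse a date written in any known format and return it as ISO 8601 (YYYY-MM-DD)."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {raw!r}")


for value in ["2024-03-05", "05/03/2024", "Mar 5, 2024"]:
    print(to_iso_date(value))  # each prints 2024-03-05
```

Raising an error on unrecognized input, rather than guessing, makes new formats visible as soon as they appear.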

Timeliness

Timeliness refers to how recent and up-to-date the information is. For a number of applications, timely data is essential as it captures the current trends and patterns in customer behavior or business health.

Data tends to lose its value over time, and stale data can drastically affect the quality of business decisions as well as the predictions of machine learning models trained on it. It can cost organizations time and money, in addition to causing reputational damage.
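Timeliness can be monitored with a freshness check that flags records not updated within an acceptable window. In this sketch, the 30-day window and the `updated_at` field are assumptions; the right threshold depends on the application. `now` is passed in explicitly so the example is reproducible. In a real pipeline it would typically be `datetime.now(timezone.utc)`.

```python
from datetime import datetime, timedelta, timezone


def stale_ids(records, max_age, now):
    """Return the IDs of records last updated more than max_age before now."""
    return [r["id"] for r in records if now - r["updated_at"] > max_age]


# Hypothetical records with timezone-aware update timestamps
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "updated_at": datetime(2024, 5, 31, tzinfo=timezone.utc)},
    {"id": 2, "updated_at": datetime(2024, 3, 1, tzinfo=timezone.utc)},
    {"id": 3, "updated_at": datetime(2023, 12, 15, tzinfo=timezone.utc)},
]
print(stale_ids(records, timedelta(days=30), now))  # [2, 3]
```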

Uniqueness

Uniqueness refers to the lack of duplication or overlap within a data set or across data sets. Modeling redundant information can often lead to spurious correlations or results that can adversely affect statistical analysis as well as model predictions.

Thus, uniqueness is a critical dimension of data quality that helps build trust in the data for downstream use cases.
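The sketch below detects exact duplicates after light normalization of a chosen key. It also reports a simple uniqueness score: the share of records that remain after deduplication. Using the email address as the key is an assumption for illustration. Fuzzier cases, such as the same person with slightly different name spellings, need dedicated entity-resolution techniques that are beyond this example.

```python
def deduplicate(records, key_fields):
    """Split records into (unique, duplicates) using a normalized key built from key_fields."""
    seen = set()
    unique, duplicates = [], []
    for record in records:
        # Normalize case and surrounding whitespace so near-identical values match
        key = tuple(str(record.get(f, "")).strip().lower() for f in key_fields)
        if key in seen:
            duplicates.append(record)
        else:
            seen.add(key)
            unique.append(record)
    return unique, duplicates


# Hypothetical records; the first two refer to the same customer
records = [
    {"id": 1, "email": "Ana@Example.com "},
    {"id": 2, "email": "ana@example.com"},
    {"id": 3, "email": "ben@example.com"},
]
unique, duplicates = deduplicate(records, ["email"])
print([r["id"] for r in unique], [r["id"] for r in duplicates])  # [1, 3] [2]
print(f"uniqueness: {len(unique) / len(records):.2f}")  # uniqueness: 0.67
```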

Validity

For several data fields, validation checks are important. For instance, a US phone number (without the country code) is ten digits long, and a basic US zip code has five digits. When data does not conform to standard formats or business-specific rules, it is said to be invalid. Invalid data can cause serious errors in downstream analytics, so every data column needs careful scrutiny before it is used.
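Format rules like these are often expressed as regular expressions. The rules below are simplified and hypothetical: they assume phone numbers have already been stripped of formatting such as dashes or parentheses. Using `fullmatch` rather than `match` matters here. It rejects a six-digit zip code outright instead of accepting its first five digits.

```python
import re

# Simplified, hypothetical rules: 10-digit US phone numbers and 5-digit US zip codes
RULES = {
    "phone": re.compile(r"\d{10}"),
    "zip": re.compile(r"\d{5}"),
}


def invalid_fields(record):
    """Return the names of fields whose values do not fully match their format rule."""
    return [
        field
        for field, pattern in RULES.items()
        if not pattern.fullmatch(str(record.get(field, "")))
    ]


print(invalid_fields({"phone": "5551234567", "zip": "02134"}))  # []
print(invalid_fields({"phone": "555-1234", "zip": "021345"}))   # ['phone', 'zip']
```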

Truncation of data also leads to data-validity problems. For instance, a user may mistakenly input six digits for a US zip code, which gets truncated to five digits. While such an input may pass data-validation checks, it is ultimately inaccurate.

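Additional sources of data-validity errors arise from mismatched data formats. For instance, a zip code may be saved as a number in one system and as a string in another. Stored as a number, a zip code such as 02134 loses its leading zero and becomes 2134, which then fails a five-digit check.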
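A minimal sketch of normalizing such a field to a single type follows. It assumes that any numeric value was originally a five-digit zip code whose leading zeros were dropped. Padding restores the zeros but does not validate the result, so a format check is still needed afterwards.

```python
def normalize_zip(value) -> str:
    """Coerce a zip code stored as an int or a str into a five-character string."""
    if isinstance(value, int):
        # Numeric storage drops leading zeros (02134 -> 2134), so pad them back
        return f"{value:05d}"
    return str(value).strip()


print(normalize_zip(2134))       # 02134
print(normalize_zip("02134"))    # 02134
print(normalize_zip(" 10001 "))  # 10001
```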

Improving data quality

There are numerous methods for improving data quality.

The first step often involves data profiling, that is, an initial assessment of the current state of the data sets. Defining what counts as good data is also critical to establishing guardrails around selecting data for further use.
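A basic profile summarizes each column before any deeper checks are written. This sketch uses only the standard library and reports three statistics per column: the number of missing values, the number of distinct values, and the most common value. The rows are hypothetical. Dedicated profiling tools report many more statistics, but the idea is the same.

```python
from collections import Counter


def profile(records):
    """Summarize each column: missing count, distinct non-missing values, most common value."""
    columns = sorted({key for record in records for key in record})
    report = {}
    for column in columns:
        values = [record.get(column) for record in records]
        present = [v for v in values if v not in (None, "")]
        report[column] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "most_common": Counter(present).most_common(1)[0][0] if present else None,
        }
    return report


# Hypothetical rows; one zip is empty and one is absent entirely
rows = [
    {"country": "US", "zip": "02134"},
    {"country": "US", "zip": ""},
    {"country": "CA"},
]
for column, stats in profile(rows).items():
    print(column, stats)
# country {'missing': 0, 'distinct': 2, 'most_common': 'US'}
# zip {'missing': 2, 'distinct': 1, 'most_common': '02134'}
```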

Furthermore, checks for validity, completeness, consistency, and timeliness can be defined as acceptance criteria that all current and new data sets must meet.
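Acceptance criteria like these can be expressed as named checks that run against every incoming batch. A batch is accepted only if all checks pass. The check names, fields, and batch below are hypothetical, and this is a sketch of the pattern rather than any particular tool's API.

```python
from typing import Callable

# A check takes the full data set and returns True if it passes
Check = Callable[[list], bool]


def run_checks(records, checks):
    """Evaluate every named check and return a {name: passed} report."""
    return {name: bool(check(records)) for name, check in checks.items()}


# Hypothetical acceptance criteria for a customer table
CHECKS: dict[str, Check] = {
    "ids_unique": lambda rs: len({r["id"] for r in rs}) == len(rs),
    "emails_present": lambda rs: all(r.get("email") for r in rs),
    "zips_five_digits": lambda rs: all(
        isinstance(r.get("zip"), str) and len(r["zip"]) == 5 and r["zip"].isdigit()
        for r in rs
    ),
}

batch = [
    {"id": 1, "email": "ana@example.com", "zip": "02134"},
    {"id": 2, "email": "", "zip": "10001"},
]
report = run_checks(batch, CHECKS)
print(report)  # {'ids_unique': True, 'emails_present': False, 'zips_five_digits': True}
print("accepted" if all(report.values()) else "rejected")  # rejected
```

Keeping each rule small and named makes the report itself useful: it says which criterion failed, not just that the batch was rejected.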

Standardizing data across the organization helps it meet data-quality standards and ensures that stakeholders in every division share the same understanding of the various data sets and fields.
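One concrete form of standardization is a shared data dictionary: a single definition of each field's name, type, and meaning that every team validates against. The schema below is hypothetical. The check reports three kinds of problems: missing fields, wrongly typed fields, and fields that are not in the dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    name: str
    dtype: type
    description: str


# Hypothetical organization-wide data dictionary for a customer table
CUSTOMER_SCHEMA = (
    FieldSpec("customer_id", int, "Unique customer identifier"),
    FieldSpec("full_name", str, "Customer's full name"),
    FieldSpec("zip_code", str, "Five-digit US zip code, stored as text"),
)


def schema_violations(record, schema=CUSTOMER_SCHEMA):
    """List the ways a record deviates from the shared schema."""
    problems = []
    for spec in schema:
        if spec.name not in record:
            problems.append(f"missing field: {spec.name}")
        elif not isinstance(record[spec.name], spec.dtype):
            actual = type(record[spec.name]).__name__
            problems.append(f"{spec.name}: expected {spec.dtype.__name__}, got {actual}")
    expected = {spec.name for spec in schema}
    for extra in sorted(set(record) - expected):
        problems.append(f"unexpected field: {extra}")
    return problems


print(schema_violations({"customer_id": 7, "full_name": "Ana Lima", "zip_code": 2134}))
# ['zip_code: expected str, got int']
```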

Implementing a robust data governance framework can also help businesses improve the quality of organizational data.

Finally, recent advances in machine learning and deep learning can also be used to assess and improve the quality of data in a more scalable and reproducible fashion. For example, one study used a data-quality assessment framework grounded in statistics and deep learning to identify outliers in a data set of salary information published by the state of Arkansas, USA.
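The deep-learning framework from that study is not reproduced here. As a much simpler statistical baseline for the same task, this sketch flags outliers using the modified z-score. The score is based on the median and the median absolute deviation (MAD), so extreme values do not distort it the way they distort a mean and standard deviation. The 3.5 threshold is a common rule of thumb, not a universal constant, and the salaries are hypothetical.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold.

    The modified z-score is 0.6745 * |x - median| / MAD, where MAD is the
    median absolute deviation from the median.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # most values equal the median, so the score is undefined
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical annual salaries in USD; the last one may be a data-entry error
salaries = [52000, 48000, 51000, 49500, 50500, 47000, 950000]
print(mad_outliers(salaries))  # [6]
```

A flagged value is a candidate for review, not proof of an error; a legitimately high salary would be flagged just the same.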

As the volume of organizational data is expected to keep growing rapidly in the coming years, companies ought to dedicate resources and investment to new techniques from fields like machine learning and deep learning that measure the quality of their data and provide statistical insights into it.

Conclusion

In this article, you’ve learned what data quality is and why it is important for organizations to measure and evaluate the quality of their in-house data. Poor-quality data can have significant consequences for a business in terms of inaccurate analytics, predictive machine learning models trained on bad data, as well as ill-informed business decisions and strategies.

Data quality can be measured along a number of dimensions, such as accuracy, completeness, consistency, timeliness, uniqueness, and validity. Each of these dimensions is important, and organizations can improve the quality of their data by putting robust data profiling, standardization, and validation checks in place. More recently, advances in machine learning and deep learning can also be harnessed to quantitatively define and evaluate the quality of data.